AI tools help developers generate, refactor, and review code faster, but they also change how governance and compliance risk enters the SDLC.

When AI-generated code, prompts, and tool usage are not linked to specific developers, organizations lose visibility into policy violations, licensing exposure, and regulatory risk.

AI code governance ensures AI-assisted development remains accountable, auditable, and aligned with organizational and regulatory expectations.

Organizations focused on AI code governance must address risks such as:

Policy and Licensing Violations

AI-generated code may violate open-source licenses, intellectual property rules, or internal development policies when usage is not governed.

Regulatory and Compliance Gaps

AI-assisted development may bypass required controls without clear oversight and auditability.

Data Exposure and Confidentiality Risk

Sensitive information may be exposed through AI prompts or embedded in AI-generated code.

Unattributed AI Usage

When AI contributions are not linked to developers, governance enforcement and remediation break down.
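To make the data exposure risk above concrete, here is a minimal Python sketch of a pre-send check that flags and redacts sensitive strings in a prompt before it reaches an AI tool. The pattern set, the names `SENSITIVE_PATTERNS`, `find_sensitive`, and `redact`, and the redaction format are all assumptions made for illustration; they do not describe any particular product, and a real policy would need a much broader and better-tested rule set.

```python
import re

# Hypothetical patterns chosen for illustration only; a real policy
# would define and maintain its own, far more complete rule set.
SENSITIVE_PATTERNS = {
    "aws_access_key_id": re.compile(r"AKIA[0-9A-Z]{16}"),
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)password\s*[:=]\s*\S+"),
}


def find_sensitive(prompt: str) -> list[str]:
    """Return the sorted names of every pattern that matches the prompt."""
    return sorted(
        name for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    )


def redact(prompt: str) -> str:
    """Replace each match with a labelled placeholder before sending."""
    for name, pattern in SENSITIVE_PATTERNS.items():
        prompt = pattern.sub(f"[REDACTED:{name}]", prompt)
    return prompt
```

A check like this could run in an editor plugin or a proxy in front of the AI tool. Pattern matching only catches known formats, so it would complement, not replace, policy and review.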

The risks associated with generative AI tools are not hypothetical. Public incidents have shown that unmanaged AI usage can result in licensing violations, data exposure, and regulatory risk—reinforcing the need for strong AI code governance frameworks:

GitHub Copilot Licensing Violation (2023): GitHub Copilot was reported to produce suggestions matching public repository code in roughly 1% of cases, including GPL-licensed code. Organizations that ship such code without meeting the license terms risk legal disputes and, under copyleft licenses, the potential forced open-sourcing of proprietary projects.

Samsung Data Leak via ChatGPT (2023): In early 2023, Samsung employees accidentally exposed confidential data while using ChatGPT to review internal documents and code. In response, the company banned generative AI tools in May 2023 to prevent future breaches.

Amazon on Sharing Confidential Information with ChatGPT (2023): Amazon warned employees not to share confidential information or sensitive business data with generative AI platforms such as ChatGPT, following concerns that these tools might inadvertently expose proprietary data.

Many organizations embrace AI for code generation, but many also underestimate the associated risks. Archipelo supports AI code governance by making AI-assisted development observable: it links AI tool usage, AI-generated code, and governance risk to developer identity and actions across the SDLC.

How Archipelo Supports AI Code Governance

AI Code Usage & Risk Monitor

Monitor AI tool usage and correlate AI-generated code with governance, compliance, and security risks.Developer Vulnerability Attribution

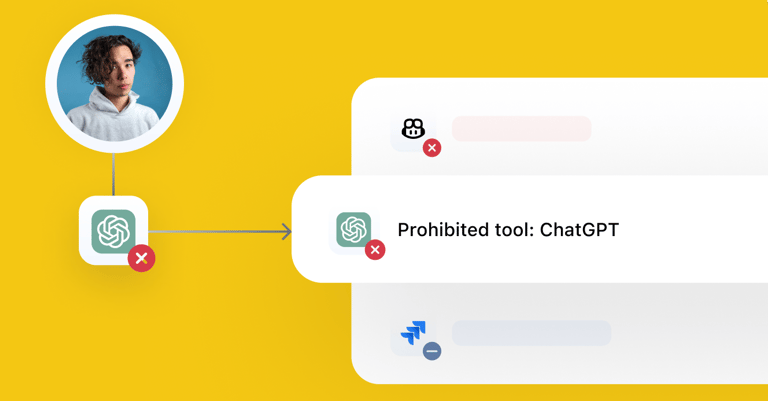

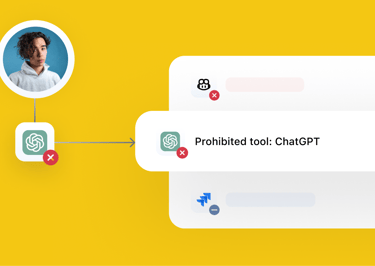

Link risks introduced through AI-assisted development to the developers and AI agents involved.Automated Developer & CI/CD Tool Governance

Inventory and govern AI tools, IDE extensions, and CI/CD integrations to mitigate unapproved or non-compliant AI usage.Developer Security Posture

Generate insights into how AI-assisted development impacts individual and team governance posture over time.
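As a rough illustration of the attribution and tool-inventory ideas above, the following Python sketch groups AI usage events by developer and flags tools that are not on an approved list. It is not Archipelo's implementation or API: the `AIUsageEvent` record, the `APPROVED_TOOLS` set, the tool names, and the `unapproved_usage` function are hypothetical names introduced here, and the sketch assumes usage events have already been collected from some source.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical inventory of approved AI tools (names are placeholders).
APPROVED_TOOLS = {"approved-assistant"}


@dataclass(frozen=True)
class AIUsageEvent:
    """One observed use of an AI tool, tied to a developer and a commit."""
    developer: str
    tool: str
    commit: str


def unapproved_usage(events: list[AIUsageEvent]) -> dict[str, list[AIUsageEvent]]:
    """Group events that used a non-approved tool by developer."""
    findings: dict[str, list[AIUsageEvent]] = defaultdict(list)
    for event in events:
        if event.tool.lower() not in APPROVED_TOOLS:
            findings[event.developer].append(event)
    return dict(findings)
```

Keeping the developer and commit on each event is what lets a finding be traced back and remediated; without that link the same check could only report that an unapproved tool was used somewhere.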

AI-assisted development requires governance, attribution, and accountability to remain sustainable at scale.

AI code governance enables organizations to innovate responsibly while reducing legal, regulatory, and security exposure across the SDLC.

Archipelo delivers developer-level visibility and actionable insights to help organizations reduce AI-related governance risk across the SDLC.

Contact us to learn how Archipelo supports responsible AI-assisted development while aligning with governance, compliance, and DevSecOps principles.